Even if you stay free of Alzheimer's disease, the normal aging process is fairly destructive to your brain. Neurons disappear, connections lose their strength, protein gunk builds up, and the whole brain shrinks. Areas controlling learning and memory are among the hardest hit. A new study claims that our crumbling brains aren't just a fact of normal aging. Instead, they may be unique in the animal kingdom, the result of an evolutionary bargain our species has struck.

Chet Sherwood at George Washington University led the study, which put humans and captive chimpanzees of various ages through MRI scanners. The humans ranged from ages 22 to 88. Chimps were between 10 and 45 years old, because 45 years is about as long as chimps can live in the wild (more on that in a moment).

In humans, the researchers found a pattern of decreasing brain volume throughout life that accelerated into old age. That pattern was missing in chimpanzees, whose brains seemed to maintain a consistent size.

Chimpanzees were used because they're our closest living relatives; we've been apart for only about 6 million years of evolution. The authors reason that because chimps' brains don't shrink as they age, our own brain degeneration must be a product of our recent evolution. We've developed brains that are big and energy-hungry, and to judge from our global population size, throwing our resources into our noggins seems to have been a good evolutionary strategy.

Since splitting from our ape relatives, we've also evolved longer life spans. Women, in particular, are a curiosity because they can live decades past their fertile years. Evolutionary biologists have hypothesized that keeping infertile elderly women around is no accident, because these grandmothers can bolster the success of their own genes by helping to take care of their grandchildren. The authors of the chimp study suggest that these helpful grandmothers are to blame for our degenerating brains: we've evolved long life spans and brains that can't quite keep up.

The grandmother hypothesis, though, is hard to prove. And though 45 is elderly for a chimpanzee in the wild, the authors acknowledge that chimps under medical care in captivity can live into their 60s. Is a human today who lives into her 80s, thanks to medical care and disease prevention, comparable to a chimp in the wild? Or is a human "in the wild" better represented by someone in a southern African country with a life expectancy in the 30s or 40s?

If this study included chimpanzees at the true upper end of their age potential, it might provide more insight. The authors acknowledge that some previous studies have shown different results; for example, a study of brain mass that included chimpanzees up to age 59 did find some shrinkage with age.

The authors assume our damaging brain decline is a byproduct of evolution, but don't ask whether it might come from extending our life spans even further than evolution intended. Some perspective might come from studying another animal that no longer lives "in the wild": domestic dogs. Wolves live six to eight years in the wild, but many kinds of pet dogs can live for twice that long.

Even though they're not close to us in evolutionary terms, dogs age much like humans do. Their brains shrink in old age, especially in the prefrontal cortex and the hippocampus--the same areas that are particularly vulnerable in humans. Dogs develop cognitive problems and behavioral changes. Their brains even accumulate deposits of amyloid-beta, the protein gunk that appears in humans and is linked to Alzheimer's disease. Maybe our aging brains are not only the result of our exceptional smarts, then, but also of our domestication.

Sherwood, C., Gordon, A., Allen, J., Phillips, K., Erwin, J., Hof, P., & Hopkins, W. (2011). Aging of the cerebral cortex differs between humans and chimpanzees Proceedings of the National Academy of Sciences DOI: 10.1073/pnas.1016709108

With Great Stature Comes Great...er Cancer Risk

Bad news, tall people: Your extra inches are increasing your likelihood of developing cancer. And the taller you are, the worse it is. Here's what that means for you, your sunscreen use, and the Dutch.

A study in the UK followed almost 1.3 million women for about 9 years. The women were middle-aged and had not had cancer before. Over the following years, researchers kept track of which women developed cancers. They also sorted the women into groups by height. What emerged was a tidy correlation: Compared with a very short woman (under 5 foot 1), your overall cancer risk increases by about 16% for every extra 10 centimeters in height. At 5 foot 5, your cancer risk is about 20% greater than the short woman's. And if you're 5 foot 9 or taller, you have a 37% greater chance of developing cancer than your 5-foot-tall friend.
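For the curious, here is a rough sketch of how a "16% per 10 centimeters" figure plays out at specific heights. It assumes the risk compounds smoothly with every centimeter, which is a modeling assumption made for illustration and not necessarily how the study's authors fit their data. The function names and the 5-foot-0 baseline are also just choices made for this example.

```python
CM_PER_INCH = 2.54


def feet_inches_to_cm(feet, inches):
    """Convert a height in feet and inches to centimeters."""
    return (feet * 12 + inches) * CM_PER_INCH


def relative_risk(height_cm, baseline_cm, increase_per_10cm=0.16):
    """Risk relative to the baseline height.

    Assumes the per-10-cm increase compounds (a log-linear model);
    this is an illustrative assumption, not the study's stated method.
    """
    steps = (height_cm - baseline_cm) / 10.0
    return (1.0 + increase_per_10cm) ** steps


baseline = feet_inches_to_cm(5, 0)  # hypothetical "5-foot-tall friend"
for feet, inches in [(5, 5), (5, 9)]:
    rr = relative_risk(feet_inches_to_cm(feet, inches), baseline)
    print(f"{feet} ft {inches} in: about {100 * (rr - 1):.0f}% higher risk")
```

Under that assumption the sketch lands at roughly 21% and 40%, in the same neighborhood as the study's 20% and 37%. The gap is unsurprising, since the study compared groups of women in height ranges rather than two exact heights.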

Your height isn't totally independent from other variables in your life. Height is related to nutrition and health in childhood, and correlates with socioeconomic status. In addition to being wealthier, tall women in the study were more active, had fewer children, and were less likely to smoke or be obese (though they did drink more). However, when the researchers controlled for all of these variables--in addition to age, BMI, geography, and use of hormonal birth control--the height effect remained.

The researchers also analyzed 10 other, similar studies, applying to men and women on other continents. Overall, these studies came to almost the same conclusion: cancer risk went up by 14% for each 10 centimeters in height.

In the British study, some specific types of cancer had especially strong increases associated with height. Colon cancer, kidney cancer and leukemia risks increased by about 25% with every 10 centimeters in height, while the risk of malignant melanoma (skin cancer) increased by 32%.

Of course, tall people have more skin cells to start with. (And they're closer to the sun!) In fact, tall people have more cells overall. Is the height effect simply a cell-number effect? Does having more cells just create more opportunities for a cell to go rogue? It seems like the simplest explanation.

But the authors also wonder if higher circulating levels of growth hormones in childhood and adulthood, which are known to be linked to cancer, could be at fault. Another recent study found that Ecuadorians with a rare type of dwarfism, whose bodies don't respond to growth hormones, almost never get cancer. It's an intriguing hint that something more than mathematics is at work.

A 15% increase in risk can be more or less of a cause for alarm, depending on your starting point. If a certain type of cancer affects 1 in 100 people, a 15% increase means it now affects 1.15 out of 100 people: essentially, still just the one person. For something like breast cancer, which affects closer to 1 in 8 women, that 15% is more meaningful. Given all the other risk factors in an individual person's life, though--known and unknown--a 15% increase could be easily overwhelmed by other variables. Smoking, for example, increases your risk of lung cancer by something like 2,300%. Having a million-person study gave the authors the statistical power to find much smaller effects.
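The arithmetic in that paragraph can be sketched in a few lines. The function names below are made up for this illustration; the baseline rates (1 in 100, 1 in 8) and the 2,300% smoking figure are the ones quoted above.

```python
def cases_per_100(baseline_risk, relative_increase):
    """People affected per 100 after a relative increase is applied to a baseline risk."""
    return 100 * baseline_risk * (1 + relative_increase)


def risk_multiplier(percent_increase):
    """Turn a percent increase into a multiplier (a 100% increase doubles the risk)."""
    return 1 + percent_increase / 100


# A rare cancer (1 in 100) versus a common one (1 in 8), each with a 15% increase.
print(f"rare:   {cases_per_100(1 / 100, 0.15):.2f} per 100")
print(f"common: {cases_per_100(1 / 8, 0.15):.1f} per 100")

# The smoking comparison: a 2,300% increase expressed as a multiplier.
print(f"smoking multiplies lung cancer risk by about {risk_multiplier(2300):.0f}")
```

The same 15% adds only 0.15 of a case per 100 people for the rare cancer, but nearly two cases per 100 for the common one (from 12.5 to about 14.4). A 2,300% increase, by contrast, means roughly 24 times the baseline risk.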

Even if the increase in risk is small, it might be noteworthy globally, given that the populations of many countries are growing taller as they become wealthier. The authors say that during the 20th century, heights throughout Europe increased by about 1 centimeter per decade. This factor alone might have increased cancer incidence by 10 or 15%.

Maybe we should stop feeling sore about the Dutch becoming the tallest people in the world while our own populace lags behind various Scandinavian nations. At least we don't have the top cancer rates! (While we're at it, we can stop blaming our cell phones, too.)

Green, J., Cairns, B., Casabonne, D., Wright, F., Reeves, G., & Beral, V. (2011). Height and cancer incidence in the Million Women Study: prospective cohort, and meta-analysis of prospective studies of height and total cancer risk The Lancet Oncology DOI: 10.1016/S1470-2045(11)70154-1

What Marathoner Mice Can Teach Us

If someone left a treadmill in your living room, how far would you run every day just because you felt like you had some energy to burn? Five miles? Zero miles, and you'd use it as a tie rack? How about 65 miles?

Researchers at the University of Pennsylvania and elsewhere studied mutant mice that were missing a particular gene involved in cell signaling. They thought the gene had something to do with muscle development, and sure enough, they found that these mice had some pretty definite abnormalities in their muscles. For example, when an exercise wheel was put in their cages, the mice ran and ran. The wheels were rigged to devices that counted the number of spins they took, and the researchers converted that number to a distance. They found that during just one night (the active period for mice), mutant mice ran an average of 5.4 kilometers on their wheels.

(5K is a much longer haul for a mouse than for a human. How much longer? A very rudimentary calculation* tells me that the distance run by the mutant mice each night is roughly equivalent to 65 miles for a human. That's not to say that the effort expended would be equivalent--I'm no expert on the mechanics of mouse locomotion, and having four legs probably changes things. But that's about how far the same number of strides would take us.)
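Here is a minimal sketch of that stride-for-stride conversion. The actual stride lengths aren't quoted in this post, so the code works backward from the two numbers that are (5.4 kilometers for the mouse, 65 miles for the human) to find the stride ratio they imply. The function names are invented for the example.

```python
KM_PER_MILE = 1.609344


def human_equivalent_km(mouse_km, stride_ratio):
    """Distance a human covers taking the same number of strides as the mouse.

    stride_ratio is human stride length divided by mouse stride length.
    """
    return mouse_km * stride_ratio


def implied_stride_ratio(mouse_km, human_miles):
    """Stride ratio implied by a mouse distance and its claimed human equivalent."""
    return human_miles * KM_PER_MILE / mouse_km


ratio = implied_stride_ratio(5.4, 65)
print(f"implied human-to-mouse stride ratio: about {ratio:.1f}")
print(f"check: {human_equivalent_km(5.4, ratio) / KM_PER_MILE:.0f} miles")
```

In other words, the 65-mile figure corresponds to a human stride a little over 19 times as long as a mouse's. Plug in a different ratio and the human-equivalent distance scales right along with it.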

Even when they weren't on their exercise wheels, the mutant mice were more active, constantly scurrying around their cages. To find out what let the mice stay so active, the researchers took muscles** out of their legs and examined them. Muscles are often described as fast-twitch, used for quick bursts of activity, or slow-twitch, better suited for aerobic exercise. When the researchers contracted the cut-out muscles electrically, muscles that should have been fast-twitch fatigued more slowly than usual. Under a microscope, those same muscles contained more muscle fibers and more mitochondria (the cellular powerhouses). Overall, the fast-twitch muscles now looked like slow-twitch ones.

The mutant mice were also skinnier than normal mice, which isn't much of a surprise.

One wonders if these mice, now overly suited for marathoning, were worse at fast-twitch activities such as sprinting or bench-pressing. Sadly, the authors didn't set up any mouse decathlons to find out. But they did look for related genetic variations in humans.

You can't remove a gene from a person like you can from a mouse (at least, ethics boards would probably frown upon it), but you can look for mutations that already exist in human DNA. Conveniently, the gene that was studied in the marathoner mice exists in a few different variants in humans. The researchers looked at DNA from 209 elite athletes in 11 different sports. When broken down by sport, some of the groups displayed distinct genetic profiles. Cyclists, for example, were more likely than usual to have a certain variant of the gene--while triathletes and elite rowers were more likely to have another variant.

In humans, as in mice, the gene in question seems to be involved in how muscles develop. The authors speculate that further research on this gene could help people with muscular diseases, or the obese or elderly. Increasing a person's muscular endurance could help them to lose weight or to keep active in old age. (It could also help professional cyclists cheat, as if they needed any help in that area.)

It's still not clear how a mutant muscle type affects a mouse's or human's motivation to move. The mice in the study weren't put on wheels and forced to run until they collapsed; they voluntarily got up and ran a 5K every night because they felt like it. Maybe this, too, will be a key insight into obesity--the condition of your muscles may not be independent from your desire to exercise. Something at a cellular level told the mice to just keep moving. If we could tap into that force in our own bodies, we might all be able to get ourselves off the couch and onto the exercise wheel.

*I converted the distance into strides using this site's measurement of mouse stride length (moving at average speed) and this site's reported stride length for a female marathoner. Better calculations, or ideas about how to compare distances between small four-legged animals and tall bipeds, are welcome.

**Linguistic point of interest: "Muscle" comes from the Latin for "little mouse."

Pistilli, E., Bogdanovich, S., Garton, F., Yang, N., Gulbin, J., Conner, J., Anderson, B., Quinn, L., North, K., Ahima, R., & Khurana, T. (2011). Loss of IL-15 receptor α alters the endurance, fatigability, and metabolic characteristics of mouse fast skeletal muscles Journal of Clinical Investigation DOI: 10.1172/JCI44945

Human Jell-O (Endorse THAT, Bill Cosby)

Marshmallows, gummy bears, and Jell-O are all made jiggly by gelatin, an ingredient that comes from processed leftover pig and cow parts. If this troubles you, you may be glad to hear that some researchers are working on an alternative to animal-derived gelatin. Namely, gelatin that's human-derived.

Gelatin is made by breaking down collagen, a protein that's plentiful in mammals' connective tissues. Collagen's ropy molecules run through bones, skin, and tendons. Spare bones and hides from the meat industry (but not hooves, despite what you may have heard) are collected and processed to make gelatin. Collagen holds people together; gelatin holds Peeps together.

Gelatin also shows up in cosmetics (as "hydrolyzed animal protein"), pharmaceuticals (in vaccines and gel capsules), and paintballs (like an oversized Nyquil full of hurt). But gelatin's not a perfect product. The manufacturing process creates molecules of varying sizes, not the consistent product that the pharmaceutical industry would prefer. The animal proteins can trigger immune reactions in humans. And like any process that involves repurposing animal scraps, gelatin manufacturing carries a slight risk of spreading infections such as mad cow disease.

A group of researchers in China, in search of a better gelatin, have turned to humans. Rather than boiling up some human bones, they engineered yeast that can make human gelatin from scratch. The researchers inserted a set of human genes into yeast cells, and the cells used their own machinery to manufacture human collagen and process it into gelatin. Though the result had never been inside a human, it was human gelatin.

If this technique catches on, it could be a safer and more efficient way to make the gelatin we use in drugs and vaccines. It's hard to say whether human gelatin could ever catch on in the food industry, though. Gummy bears made with pig protein (or Twinkies made with beef fat) are one thing, but gelatin made from human genes--THAT would be gross.

Duan, H., Umar, S., Xiong, R., & Chen, J. (2011). New Strategy for Expression of Recombinant Hydroxylated Human-Derived Gelatin in Pichia pastoris KM71 Journal of Agricultural and Food Chemistry, 59 (13), 7127-7134 DOI: 10.1021/jf200778r

Cold-Blooded but Not Dumb

Just because an animal has a base-model brain and can't regulate its own body temperature doesn't mean it's unintelligent. Recent news shows two cold-blooded animals, a fish and a lizard, cleverly solving problems--and giving us brainier animals reason to question our superiority.

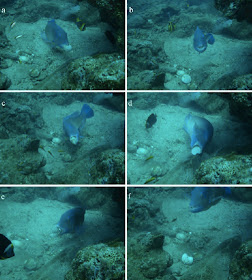

Swimming back from a 60-foot dive in the Great Barrier Reef, a diver "heard a cracking noise" and turned to see a fish exhibiting a surprising behavior. The fish was a black spot tuskfish, also called a green wrasse, and it was holding a cockle in its mouth while hovering just above a rock. While the diver snapped pictures, the fish spent well over a minute rolling its body side to side and whacking the shellfish against the rock's surface. Finally, the shell broke, leaving its inhabitant available for eating.

Though there have been reports of fish smashing food against coral or rocks before, no one's documented this behavior in the wild until now.

The Australian authors of this paper say that the wrasse's action meets Jane Goodall's definition of tool use: "the use of an external object as a functional extension of mouth or beak, hand or claw." However, Goodall later added* that a tool must be "held." Smashing a shell against a rock (or using the rock as an "anvil," as the authors generously put it) doesn't fit Goodall's definition.

Despite a bit of overstatement by the authors, the wrasse's behavior is not a bad trick for a fish. In another paper, a group of anole lizards demonstrated that they could solve a problem as well as--or better than--birds can.

Researchers from Duke University challenged six emerald anole lizards with a find-the-larva task. In each trial, a lizard was presented with a platform holding two wells, inside one of which was a freshly killed larva (mm!). To train the lizards, scientists first put the larvae inside an uncovered well. Then they partially covered the larva-containing well with a blue lid, so the lizards learned that the blue cover was always near a treat.

Next, the researchers gave the trained lizards the same apparatus, but covered the larva tightly with its blue lid. Four out of the six lizards figured out how to get the lid off, either by biting its edge or by levering it off with their snouts. (The other two lizards failed to come up with an idea besides pouncing on top of the lid.)

The lizards that passed this challenge were given another one: Now that they knew how to get the lids off the wells, could they choose the right well when given two options? The researchers put the larva under the blue cover and put a similar-looking cover--blue with a yellow outline--on the second well. The lizards weren't fooled, and all repeatedly chose the correct lid to pry off.

Finally, the researchers swapped the larvae to the blue-and-yellow wells and gave the lizards six chances to figure it out. Two lizards succeeded, while the other two kept pulling off the blue lid until they gave up.

This is impressive, the authors say, because lizards aren't social animals or sly predators that need a lot of brainpower to get by. They catch food by sitting around and waiting for something edible to walk in front of them. Nevertheless, some of the lizards were able to solve these puzzles. A similar test has been given to birds, the authors say, which are usually thought of as cleverer animals--but the lizards actually needed fewer attempts to pass the test than birds did.

It's unlikely that any ectotherms will give us a serious challenge at the top of the cerebral heap, but we might have to expand our definition of animal intelligence to include creatures such as fish and lizards. At least two lizards, anyway.

*Source: page 2 of this book, which has a pretty hilariously wrong cover attached to it on Google Books.

Jones, A., Brown, C., & Gardner, S. (2011). Tool use in the tuskfish Choerodon schoenleinii? Coral Reefs DOI: 10.1007/s00338-011-0790-y

Leal, M., & Powell, B. (2011). Behavioural flexibility and problem-solving in a tropical lizard Biology Letters DOI: 10.1098/rsbl.2011.0480

Is Bo Obama a Fraud?

A hypoallergenic dog, we're told, is one that politely keeps its dander to itself and makes the air safer for allergy sufferers to breathe. Yet a new study claims to have debunked the whole notion of the allergy-friendly dog. Is this fair?

Researchers from the Henry Ford Health System in Detroit studied a group of 173 homes that had both a baby and exactly one dog. After surveying each dog's owners about its breed, size, and how much time the dog spent indoors, the researchers collected a sample of dust from the floor of the baby's bedroom. They then measured the amount of dog allergen in each sample (the main allergy-causing ingredient in dog dander is a molecule called Can f 1).

The families' dogs came from 60 different breeds. Researchers divided these dogs into hypoallergenic and non-hypoallergenic groups and looked for a difference in the amount of allergen the dogs left on their floors. Since "hypoallergenic" is not a label that's been defined scientifically--the problem that drove this study in the first place--the researchers tried several different methods to group the dogs. In their looser groupings, they put any dog that turned up in an internet search for allergy-free animals into the hypoallergenic category. They tried including, or not including, mutts with one supposedly hypoallergenic parent. In the strictest grouping, they considered only purebreds defined by the American Kennel Club as hypoallergenic, and compared them to all other dogs.

No matter how they sliced it, though, the researchers couldn't come up with a significant difference in the allergen level between the homes of hypoallergenic and non-hypoallergenic dogs. Furthermore, only 10 homes had no detectable amounts of allergen in their dust samples, and none of these homes held hypoallergenic dogs. "We found no scientific basis to the claim hypoallergenic dogs have less allergen," author Christine Cole Johnson said in a press release. (The paper will be published online here.)

So have we all been lied to? Is this dog, famously invited into the First Family because it wouldn't trouble little Malia's allergies, part of a large-scale deception?

The headlines would have you believe so: There's No Such Thing as Hypoallergenic Dogs, they say. Hypoallergenic Dogs Are Just a Myth. Don't Waste Your Money.

The authors of the study say it's unclear how the idea of the hypoallergenic dog originated, though it was in the late 20th century that the concept became popular. In a previous study, researchers shaved hair off of dogs of several breeds and measured the allergen present. Those authors found that although there was significant variation between breeds, there was also plenty of variation between individual dogs. And they found that poodles, dogs commonly called hypoallergenic, had a high level of allergen on their fur compared to other breeds. Christine Johnson and her co-authors wanted a more meaningful measurement of how much allergen was in the atmosphere of dog-owning homes, so they collected dust from the floors rather than fur from the dogs themselves.

However, neither study measured the amount of allergen in the air, where it's actually inhaled by allergy sufferers. Allergen that's settled on the floor might be a good approximation of what was previously in the air--except that Can f 1, the allergen being measured, has two major sources: the fur and the saliva. Dogs that are heavy droolers, or ardent crumb seekers, could be depositing allergens all over the floor without necessarily impacting how much is in the air.

"Hypo-" means "less," not "none." The American Kennel Club says that dogs producing less dander "generally do well with people with allergies," and recommends 11 breeds to try. But this study didn't have a large enough sample size to look at individual breeds; it could only compare groups of dogs. So while the study didn't find an obvious difference between supposedly hypoallergenic dogs--as a group--and other dogs, the study did not show that there's no such thing as a hypoallergenic dog.

If you're looking for a dog that doesn't trigger your allergies, you'd be better off spending some time around the dog itself than relying on an internet categorization. The variation between individual dogs' dander production might be more important than their breeds, anyway. Whether or not some breeds are truly hypoallergenic, science owes it to the allergy-ridden to create useful tests and meaningful labels for dogs. No one should have to live in fear of Bo.

Charlotte E. Nicholas, M.P.H., Ganesa R. Wegienka, Ph.D., Suzanne L. Havstad, M.A., Edward M. Zoratti, M.D., Dennis R. Ownby, M.D., & Christine Cole Johnson, Ph.D. (2011). Dog allergen levels in homes with hypoallergenic compared with nonhypoallergenic dogs American Journal of Rhinology & Allergy

Is Your Stress Affecting Your Future Grandkids?

In case you weren't worried yet about inadvertently damaging your children's and grandchildren's DNA, scientists in Japan have demonstrated precisely how that might be possible by stressing out some fruit flies.

You might think that once you've contributed sperm or egg to your offspring, its genetic destiny is set: you're free to mess up the kid psychologically or raise it exclusively on gluten-free Cheetos, but you can't do any harm to its DNA. You'd be wrong, though. Scientists have learned that factors in our environment can change our genes and our children's genes--without altering the letters of our DNA. This phenomenon is called epigenetics and it's mostly a mystery.

Some epigenetic effects have been linked to diet; for example, human mothers who diet during pregnancy might predispose their children to be heavier. For this study, scientists looked at the effect of stress on fly embryos. Specifically, they kept the embryos at an uncomfortably warm temperature for an hour at an early point in their development. This is called heat shock, and although your mother is unlikely to have briefly baked you while you were in utero, the effect of heat shock is the same as the effect of other stressors on the body.

Packed tightly into your cells' nuclei, your DNA is coiled and coiled and coiled again, like a disastrously tangled phone cord (remember phones with cords?). The fruit fly's DNA is the same way. But the coiling isn't random. Areas of DNA that are being used are more loosely coiled, your genome's way of bookmarking its most-read pages. The tighter clumps of DNA aren't easily read by our cells' machinery, and might include genetic gibberish or redundant information.

The Japanese researchers found that stressing the fly embryos disrupted the clumping of their DNA, which in turn changed what genes were read and used by the embryo during its life. And the effect went further than that: After the embryos grew into adult flies and mated, their own offspring inherited some of their mis-coiled DNA. This was true whether the parent flies were male or female. But by the next generation, the flies' DNA had reverted to its original packing style, the mistakes of the past generations somehow corrected.

The flies in this study were physically stressed. In this case, they were overheated as embryos, but that bodily stress could also have come in the form of infection or starvation. Emotional stress is a kind of physical stress too--in the long term, it's known to be harmful to our bodies. So if humans are anything like flies (don't laugh! The protein responsible for clumping flies' DNA is the same as a protein we have), physical or emotional stressors in our lives could re-package the DNA of children we don't even have yet.

It's also possible that inherited changes in how our DNA is clumped could be responsible for certain diseases. This could lead to exciting future studies--not to mention fruit flies with a good reason to be stressed.

*******

Clarification: As far as this study goes, the answer to the question "Is your stress affecting your future grandkids?" is "Possibly, if you are a pregnant woman." Since you're providing the environment for your embryo, your unborn child is like the stressed fly embryo and your future grandchildren are like the fly's children (though undoubtedly cuter). The paper doesn't address other mechanisms of epigenetic change that might apply to people other than pregnant women. But even for non-pregnant women, I would guess that your current lifestyle influences the kind of environment you'll provide for a future embryo.

*******

Seong, K., Li, D., Shimizu, H., Nakamura, R., & Ishii, S. (2011). Inheritance of Stress-Induced, ATF-2-Dependent Epigenetic Change Cell, 145 (7), 1049-1061 DOI: 10.1016/j.cell.2011.05.029

To Visualize Dinosaurs, Scientists Try Paint-by-Numbers

Now that we know some dinosaurs had down or feathers instead of the scales we used to imagine, there are intriguing new questions to be answered. Did forest-dwelling species use patterned feathers for camouflage? Did other dinosaurs use flashy colors for communication or courtship, like modern birds do? Using new imaging techniques, scientists are beginning to color in their dinosaur outlines.

In previous studies, researchers have scoured fossils of dinosaurs and early birds for melanosomes, structures in cells that hold the pigment melanin. (Despite the range of colors in our eyes, fur, and skin, most animals only produce one pigment: the brownish melanin. Blues and greens can be created by light-scattering tricks.) The shape of a melanosome can tell researchers what type of color it was responsible for, from black to yellow to red. But melanosomes, like other non-bony structures, break down over time and are hard to find in fossil form.

Instead of searching for the cellular packages that held pigments, Roy Wogelius and other scientists at the University of Manchester decided to search for traces of metals. Elements such as copper, zinc, and calcium bind to melanin while an animal is alive. Though the melanin decays over time, the metals remain behind.

The researchers used a new imaging technique that's sensitive enough to find and map trace distributions of elements. They first scanned samples from non-extinct species, including a fish eye, bird feathers, and a squid. The distribution of copper turned out to correspond with the most pigmented areas in the animals--the squid's ink sac, for example, was highly coppery.

Then Wogelius and his colleagues turned their scanners to fossils, including Confuciusornis sanctus, an early bird that lived with the dinosaurs. (Confuciusornis was discovered in China and named after who you'd think.) The researchers created a map of copper distribution in the fossil, with copper shown in red.

Based on their map, they could then sketch a shaded-in Confuciusornis.

This technique doesn't yet tell us what colors Confuciusornis wore, only where its darkest feathers were. Those areas could have been black or brown or red--or the bird could have colored its feathers through its diet, as a goldfinch or a cardinal does today. Since that kind of coloration doesn't involve melanin, it would be invisible to these scans. But as technology improves and becomes more sensitive to the microscopic tidbits of information hidden in fossils, we may be able to find out what dinosaurs really looked like. We might be able to replace the green and brown lizards plodding through today's books, like the sepia-toned photographs of the past, with something much more lifelike.

To Live Longer, Be a Happy Ape

Orangutans that achieve their goals, enjoy swinging with others, and always look on the bright side of the banana have longer lifespans than those who merely mope around the zoo. That's the conclusion of a long-term study of over 180 captive orangutans. The unhappy apes died sooner, and the happy apes lived to gloat about it.

Alexander Weiss at the University of Edinburgh and his colleagues collected data on captive orangutans in parks around the world. At the beginning of the study period, employees at each zoo who were familiar with the orangutans there rated the apes on their apparent happiness. Questions included how often each orangutan seemed to be in a positive or negative mood, whether it enjoyed social interactions, how well it was able to achieve its goals, and "how happy [raters] would be if they were the orangutan for a short period of time."

Over the next seven years, the researchers kept track of which orangutans had died. Though orangutans in captivity rarely live past their 30s, their aging process is similar to humans'. And, as in humans, females tend to outlive males.

So it wasn't surprising that more male orangutans died during the course of the study. But the researchers also found that orangutans rated as happier at the beginning of the study were less likely to die over the seven years that followed.

One standard deviation in happiness, they found, was worth about five and a half added years of life. That means the difference between a pretty happy orangutan and a pretty unhappy orangutan is 11 years of living--no small change when you can only hope for 30 to 35 years to begin with.
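For anyone who wants to check the arithmetic, here is a minimal sketch. It assumes that "pretty happy" means one standard deviation above average, "pretty unhappy" means one below, and that the effect adds up in a straight line. Those readings are mine, not necessarily the paper's, and the function name is made up for illustration.

```python
# Figure quoted in the post: about 5.5 years of life per standard deviation of happiness.
YEARS_PER_SD = 5.5


def lifespan_gap(happy_sd: float, unhappy_sd: float,
                 years_per_sd: float = YEARS_PER_SD) -> float:
    """Years of life separating two apes whose happiness scores sit
    happy_sd and unhappy_sd standard deviations from the average.

    Assumes a simple linear effect, which is an illustrative simplification.
    """
    return (happy_sd - unhappy_sd) * years_per_sd


# One SD above average versus one SD below: a two-SD spread.
gap = lifespan_gap(1.0, -1.0)
print(gap)  # 11.0

# The same gap as a share of a 30-to-35-year lifespan, in percent.
print(round(gap / 35 * 100), round(gap / 30 * 100))  # 31 37
```

Under those assumptions, the 11-year gap works out to roughly a third of an orangutan's expected lifespan.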

What could cause unhappy apes to die younger? One possibility is that apes appearing less happy are already ill in some subtle, pre-symptomatic way. Another explanation is that a positive attitude evolved through sexual selection, like a set of showy tail feathers, as a signal to potential mates that Suzy or Sammy Sunshine has good genes. (Though being able to live into old age presumably isn't as important to potential mates as just living long enough to make some baby apes.)

A third possibility is that unhappy orangutans are experiencing more stress in their life, or have a poor ability to handle stress. Our bodies react to stressors by activating a hormonal system that gears us up to fight or flee whatever real or figurative predator is chasing us. It's a survival mode in the short term, but keeping that mode switched on in the long term is damaging to our bodies. Unhappy apes may have their lives shortened by stress.

The authors note that in orangutans, as in humans, happiness doesn't rely on outside circumstances. Part of it is inherited: you're born with your personality. But genes aren't fate, and aiming for a positive attitude--or the fruit on the high branch--might keep you swinging around the jungle into old age.

Image: harrymoon/Flickr

Weiss, A., Adams, M., & King, J. (2011). Happy orang-utans live longer lives Biology Letters DOI: 10.1098/rsbl.2011.0543